Saturday, March 1, 2025

"Spiral of Silence," the Pitfall of AI Search: What Is Social Intelligence? And Why Is It the Future?

Imagine logging onto your favorite forum or social feed, eager to discuss a controversial topic. You hesitate. The loudest voices all seem to share the same few opinions, and none of them is yours. Do you speak up, or stay silent? In today’s digital world, many of us have felt this tension. We’re swimming in a sea of information, yet it often feels like we’re hearing the same few voices over and over. Why do so many people hold back their true thoughts online? And how is the rise of AI – especially those helpful large language models (LLMs) like ChatGPT – potentially making this problem worse? These questions are at the heart of the “spiral of silence” phenomenon and point toward a solution grounded in social intelligence.

When AI Search (Answer Engine) Becomes an Echo Chamber

Enter the age of AI assistants and advanced LLMs, which promise quick answers to any question. It’s a marvel of technology – ask a question and get a coherent answer in seconds. But there’s a hidden risk: if millions of people are relying on the same AI models trained on the same giant pool of internet text, will we start to get the same answers? Will we lose the nuanced, quirky, local, or expert viewpoints that make human knowledge so rich?

It’s not just a hypothetical concern. Research is already noting a worrying trend: as different users incorporate suggestions from the same AI model, there is a “risk of decreased diversity in the produced content, potentially limiting diverse perspectives in public discourse”. In one study, writers asked to co-write essays with an AI assistant ended up with more homogenized essays – using a particular LLM led to “a statistically significant reduction in diversity” of content, making different authors sound more similar. Essentially, the AI’s voice started to drown out the writers’ individual voices. Another team of researchers compared creative answers from humans and various AI models and found that AI-generated responses were much more alike each other than human responses were, even across different brands of AI. If today’s LLMs are all trained on the same internet texts and tuned in similar ways, using them widely could funnel us into a narrow range of expressions. We could unwittingly create a massive echo chamber, where the human breadth of ideas is filtered through a single, artificial lens.
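One rough way to make "more alike each other" concrete is to score how much vocabulary a set of texts shares. The sketch below is only an illustration, not the method used in the studies mentioned above: it assumes word-level Jaccard similarity as the metric, and the sample sentences and function names are invented for the example.

```python
from itertools import combinations

def word_set(text):
    """Lowercased set of whitespace-separated words in a text."""
    return set(text.lower().split())

def jaccard(a, b):
    """Share of words two texts have in common (0 = disjoint, 1 = identical sets)."""
    sa, sb = word_set(a), word_set(b)
    union = sa | sb
    return len(sa & sb) / len(union) if union else 0.0

def mean_pairwise_similarity(texts):
    """Average Jaccard similarity over every pair of texts."""
    pairs = list(combinations(texts, 2))
    if not pairs:
        return 0.0
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)

# Hypothetical samples, made up for illustration only.
human_texts = [
    "I biked along the river at dawn",
    "My grandmother taught me to bake bread",
    "We argued about football all night",
]
ai_texts = [
    "Travel broadens the mind and enriches life",
    "Travel enriches life and broadens the mind",
    "Travel broadens horizons and enriches the mind",
]

human_score = mean_pairwise_similarity(human_texts)
ai_score = mean_pairwise_similarity(ai_texts)
print(f"human: {human_score:.2f}  ai: {ai_score:.2f}")
```

A higher score means the texts overlap more. Real studies use richer measures than shared words, but the intuition is the same: homogenized writing scores closer to 1.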

Why does this happen? Part of the reason is how these AI models work. They’re trained to predict likely responses based on patterns in their data – which means they often converge on the most statistically “average” answer. One AI industry analysis bluntly noted that such systems “show a strong bias towards majority cultures, perspectives, and modes of thinking”, and that as more AI-generated content floods the web, future models will be trained on it, further entrenching majority views and creating more homogeneous content. In a worst-case scenario, if each new generation of AI learns from content produced by the previous generation, we get a feedback loop of sameness. (AI researchers have a term for this meltdown of diversity – “model collapse”, where the model’s performance degrades because it’s drinking its own stale bathwater rather than fresh data.)
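The feedback loop described above can be illustrated with a toy model. This is a deliberately simplified sketch, not a simulation of real LLM training: it assumes each "generation" re-weights the previous generation's answers toward the most likely ones (a temperature below 1), and the viewpoint shares, the temperature value, and the function names are all invented for the example.

```python
import math

def sharpen(probs, temperature=0.7):
    """Re-weight a distribution toward its most likely entries (temperature < 1)."""
    weights = [p ** (1.0 / temperature) for p in probs]
    total = sum(weights)
    return [w / total for w in weights]

def entropy(probs):
    """Shannon entropy in bits; lower means less diverse output."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def simulate_generations(initial, generations=5, temperature=0.7):
    """Each generation learns only from the previous generation's output."""
    history = [initial]
    for _ in range(generations):
        history.append(sharpen(history[-1], temperature))
    return history

# Hypothetical starting shares of five viewpoints in the training data.
viewpoints = [0.40, 0.30, 0.15, 0.10, 0.05]
history = simulate_generations(viewpoints)
entropies = [entropy(p) for p in history]

for gen, (dist, h) in enumerate(zip(history, entropies)):
    shares = ", ".join(f"{p:.2f}" for p in dist)
    print(f"generation {gen}: [{shares}]  entropy={h:.2f} bits")
```

Under these assumptions the majority viewpoint gains share every generation while the entropy of the distribution falls, which is the "feedback loop of sameness" in miniature.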

The spiral of silence could thus acquire a high-tech twist: instead of mass media or peer pressure making one opinion dominant, it could be our go-to AI answers that set the narrative and subtly discourage us from seeking alternatives. If an AI assistant consistently gives a certain viewpoint – drawn from the prevailing wisdom of its training data – users might never encounter the minority perspective at all. Over time, those unvoiced perspectives fade further, much like the minority opinions in political scientist Elisabeth Noelle-Neumann’s spiral model, the theory this piece takes its title from. The information retrieval process itself starts to bias what we see. Even search engines are integrating AI summaries on top of search results, which, while convenient, can “choke off traffic and attribution” to the diverse sources of information on the open web. Why click through to five different forums or news sites when the AI blurb already gives an answer? The result: our information diet becomes less varied, and authentic voices on those source sites get less engagement.

Theory: The Spiral of Silence and the Fear of Speaking Up

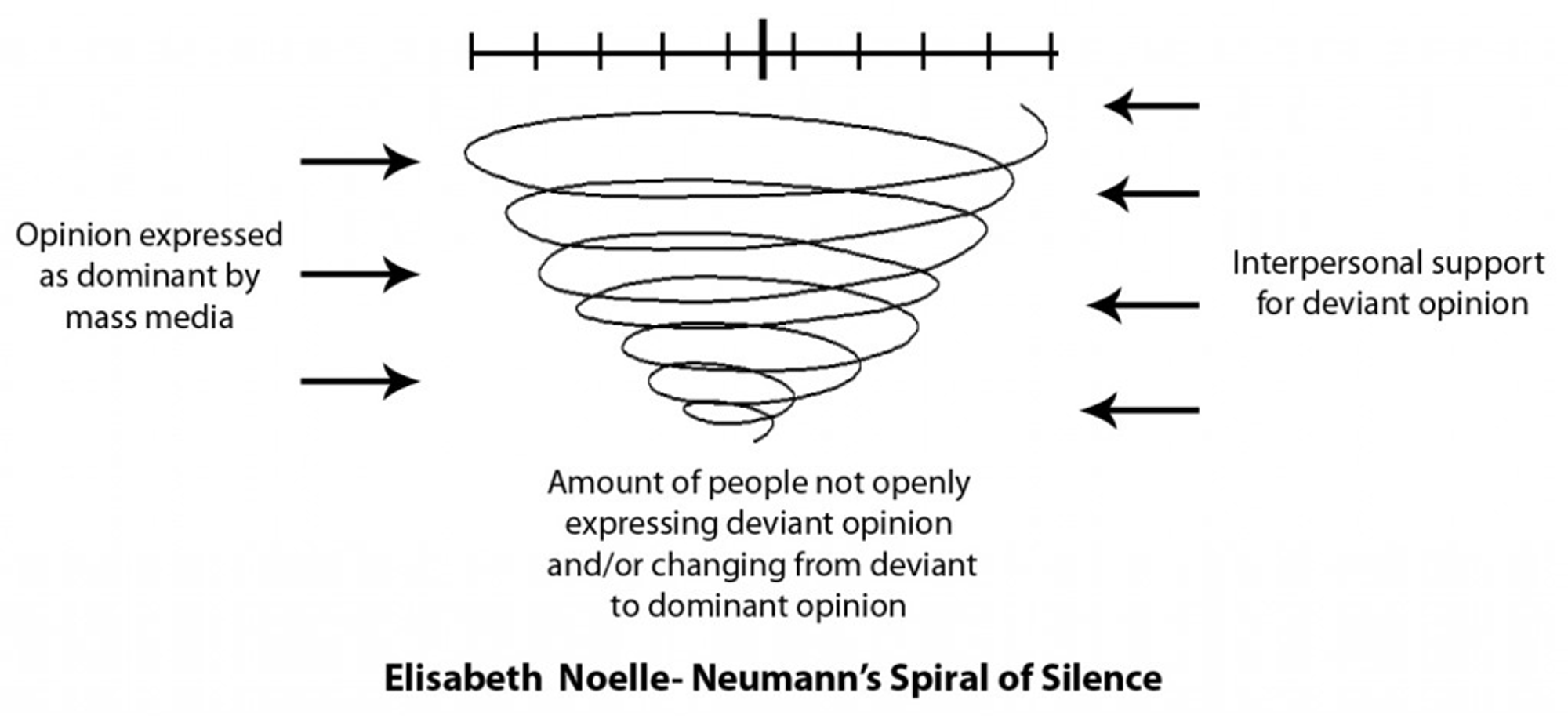

In the 1970s, German political scientist Elisabeth Noelle-Neumann coined the term Spiral of Silence to describe a powerful social force. Her theory refers to “the increasing pressure that people feel to conceal their views when they think that they are in the minority”. In simple terms, if you believe your opinion isn’t popular, you’re more likely to stay quiet. This self-censorship is driven by a very human fear: the fear of isolation or ostracism. Noelle-Neumann found that people have a quasi-statistical sense of the climate of opinion around them – we’re constantly scanning our social environment to gauge which views are safe to express. If your view seems unpopular, the “centrifugal force” of fearing social isolation kicks in. You bite your tongue, the majority opinion grows ever louder, and the minority view sinks further out of sight in a self-perpetuating spiral.

We’ve all seen this play out. On a social network, a bold stance gets wide approval and rises to the top, while opposing voices retreat for fear of being ridiculed or attacked. Over time, one viewpoint appears unanimous – not necessarily because everyone agrees, but because those who don’t have effectively been silenced. Philosopher John Stuart Mill warned about this danger long before the internet. In On Liberty, Mill argued that even if all of society minus one person shared the same opinion, silencing that one dissenter would be an injustice. That lone voice could hold a piece of the truth. In other words, diversity of thought isn’t just nice to have – it’s essential for us to inch closer to truth and understanding. The spiral of silence is a pitfall that robs us of that diversity.
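The self-reinforcing dynamic in this section can be sketched as a small simulation. It is a toy model under stated assumptions, not Noelle-Neumann's own formalization: each person speaks only if the share of voiced opinions matching their own meets a personal threshold, thresholds are spread evenly across each group, and the group sizes and function names are invented for the example.

```python
def evenly_spaced_thresholds(n):
    """Personal 'courage' thresholds spread evenly between 0 and 1."""
    return [(i + 0.5) / n for i in range(n)]

def simulate_spiral(minority=40, majority=60, rounds=10):
    """Track the minority's share of *voiced* opinions round by round.

    What people actually believe never changes; only who speaks does.
    """
    min_thresholds = evenly_spaced_thresholds(minority)
    maj_thresholds = evenly_spaced_thresholds(majority)
    voiced_share = minority / (minority + majority)  # round 0: everyone speaks
    shares = [voiced_share]
    for _ in range(rounds):
        # A person speaks only if perceived support for their view is high enough.
        min_speakers = sum(1 for t in min_thresholds if t <= voiced_share)
        maj_speakers = sum(1 for t in maj_thresholds if t <= 1 - voiced_share)
        total = min_speakers + maj_speakers
        if total == 0:
            break  # nobody is willing to speak at all
        voiced_share = min_speakers / total
        shares.append(voiced_share)
    return shares

shares = simulate_spiral()
for round_number, share in enumerate(shares):
    print(f"round {round_number}: minority share of voiced opinions = {share:.3f}")
```

In this setup 40% of people hold the minority view throughout, yet their share of what is actually said shrinks each round: the view looks rarer than it is, so fewer holders speak, so it looks rarer still.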

The Algorithmic Conformity Crisis

The Political and Philosophical Implications

Throughout history, control over information has been a fundamental mechanism of power. From the Catholic Church’s censorship in the Middle Ages to the state-controlled media in authoritarian regimes, controlling narratives dictates societal behavior. In the 20th century, mass media structured public discourse; today, social media algorithms and AI-generated content wield even greater influence, subtly shaping what we perceive as truth.

The philosopher Michel Foucault’s Discipline and Punish discusses how information structures society—knowledge is power, and those who control discourse shape reality. Social media platforms claim to be neutral intermediaries, yet their algorithms function as gatekeepers, amplifying certain voices while silencing others. When AI-generated content becomes the default source of information retrieval, we enter a new phase of digital hegemony, where diversity of thought erodes beneath the weight of self-referential machine-generated narratives.

Hannah Arendt’s The Origins of Totalitarianism warned against the dangers of manufactured consensus—when individuals believe they are exposed to an objective reality but are in fact subject to engineered truths. This is the essence of today’s AI-driven information retrieval dilemma. Search engines, once designed to surface the most relevant or authoritative information, now risk devolving into self-reinforcing loops of AI-generated content.

The Social Science Perspective

Sociologist Jürgen Habermas emphasized the importance of the public sphere—a space where diverse voices engage in reasoned discourse to shape collective understanding. However, today’s social media-driven information landscape is fracturing this sphere, leading to algorithmic filter bubbles and reinforcing ideological echo chambers. Instead of fostering debate, AI-driven search results prioritize engagement metrics, often amplifying polarizing, sensationalist, or lowest-common-denominator content.

This is why Currents is not building another AI answer engine, like Perplexity. Instead, we are focused on retrieving real user voices—the messy, diverse, and valuable human-generated content that algorithms often neglect. By prioritizing long-tail UGC (user-generated content), we ensure that niche communities—your customers, your unique audience—are not drowned out by AI-generated noise.

Social Intelligence: The Future of AI Search and Business Growth

At its core, Social Intelligence is about understanding the human digital footprint in real time. Unlike conventional AI search engines that simply provide direct answers, Social Intelligence engines analyze vast amounts of user-generated content to extract trends, insights, and emerging narratives. This is crucial for businesses, especially in marketing, product development, and competitive intelligence.

Case Study: Finding Opportunity in Negative Signals

Many businesses focus solely on positive reviews and competitor successes. However, some of the most valuable insights come from negative reviews and customer complaints—the signals that reveal gaps in the market. A logistics expert in cross-border e-commerce, with 20 years of experience, told us:

“The biggest untapped opportunity is analyzing negative feedback. Most Chinese e-commerce sellers are price-driven, but they lack direct insights into overseas consumer frustrations. If we can systematically mine negative reviews, we can identify critical weaknesses in existing products and design superior alternatives. That’s how you differentiate, not just by undercutting on price.”

This is precisely where Currents’ Social Intelligence Engine comes into play. Instead of relying on AI-generated content to define market trends, we extract real pain points, raw customer frustrations, and untapped demand from authentic user conversations.
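To give a feel for what "systematically mining negative reviews" can mean at its simplest, here is a minimal sketch. It is not Currents' engine or pipeline: it assumes reviews arrive as (star rating, text) pairs, treats two stars or fewer as negative, and simply counts how many negative reviews mention each word. The sample reviews, stopword list, and function names are invented for the example.

```python
from collections import Counter
import re

# Tiny illustrative stopword list; a real system would use a fuller one.
STOPWORDS = {"the", "a", "and", "after", "is", "it", "to", "of"}

def complaint_terms(reviews, max_rating=2, top_n=3):
    """Count how many low-rated reviews mention each non-stopword term."""
    counts = Counter()
    for rating, text in reviews:
        if rating <= max_rating:
            # Use a set so a word counts once per review, not once per mention.
            words = set(re.findall(r"[a-z']+", text.lower()))
            counts.update(words - STOPWORDS)
    return counts.most_common(top_n)

# Hypothetical reviews of a bag, made up for illustration only.
reviews = [
    (1, "The zipper broke after a week"),
    (2, "Zipper stuck and the strap broke"),
    (5, "Great bag, love the zipper"),
    (1, "Strap tore, zipper jammed"),
    (4, "Good value"),
]

top_complaints = complaint_terms(reviews)
for term, review_count in top_complaints:
    print(f"{term}: mentioned in {review_count} negative reviews")
```

Even this crude count points at a specific product weakness (here, the zipper) rather than a vague sentiment score, which is the kind of signal the expert above describes.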

The Cost Revolution: Democratizing AI-Driven Intelligence

The recent rise of cost-effective AI models, like DeepSeek, is a game-changer. AI applications that were once too expensive to run at scale, such as massive data mining for social insights, are now viable at one-tenth the cost. This means AI-powered business intelligence, once a luxury for Fortune 500 companies, is now accessible to small businesses and startups. Currents is at the forefront of this transformation, ensuring that even niche communities have a voice in shaping the future of commerce and innovation.

The Next Era: AI as a Trusted, Context-Aware Partner

We believe the next frontier of AI is not just search, but trusted, social intelligence. The ultimate AI is not one that merely answers queries, but one that deeply understands human context—an AI that remembers, predicts, and even empathizes with user interactions over time. This is where AGI (Artificial General Intelligence) must evolve: into a system that adapts dynamically to real human experiences rather than feeding on its own generated content.

Currents is pioneering this shift. We are building an AI-driven ecosystem that engages, gathers, and supports real communities—your customers, your audience—turning them into active participants in product and brand evolution. By fighting against algorithmic suppression, we are ensuring that the voices that matter most are heard. And in doing so, we are fueling the future of intelligent, human-centric AI.

In a world drowning in AI-generated noise, the key to success is not just better answers. It’s better questions—and better ways to find the people who are asking them.

As an Individual, How Do You Break the Spiral?

Embracing social intelligence is our best chance to break free from the spiral of silence in the digital era. By designing AI tools to elevate diverse perspectives, we counteract the homogenizing forces that plague online discourse. The future of information shouldn’t be an AI monologue; it should be a rich dialogue where AI plays the role of facilitator and fact-finder. We at Currents believe that technology should empower the plurality of voices, not compress them into one noise. Every time an AI helps surface a brilliant insight from a quiet corner of the internet, the spiral is unwinding. Every time a previously unheard perspective finds its audience, the collective intelligence of our society grows stronger.

The stakes are high. The value of authentic discourse – for democracy, for innovation, for mutual understanding – cannot be overstated. We stand at a crossroads where we can either slide into an information landscape dominated by self-referential AI chatter, or we can use AI in a wiser way to champion human-centric knowledge. The choice is ours to make, collectively. It’s not about rejecting AI, but about steering it: towards openness, diversity, and truth.

As you read this, you are part of the solution. By being aware of the spiral of silence, by seeking out multiple viewpoints, by supporting platforms that prioritize real voices, you help ensure that the future of our online world is not a barren monoculture but a thriving ecosystem of ideas. This is the vision that drives Currents’ mission to build a truly socially intelligent engine for information. It’s time to let every voice be heard. In the end, the antidote to a spiral of silence is a current of diverse voices – and with the right tools, we can make that current flow stronger than ever.

An AI builder who believes in AI × social connections,

Zoey